From Idea to Implementation: Crafting an AI Powered Email Assistant with Semantic Kernel and Neon Serverless

Introduction

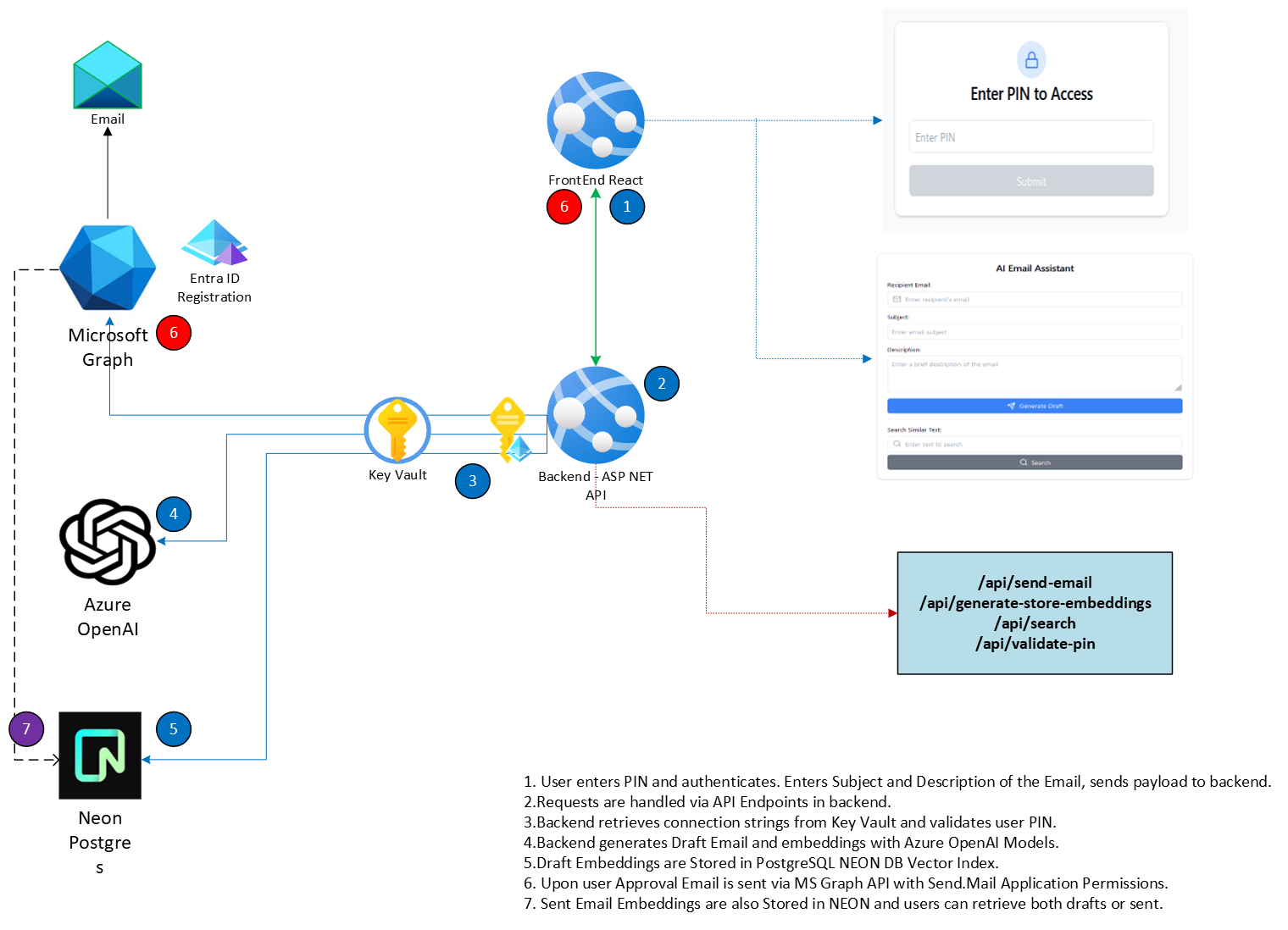

In the realm of Artificial Intelligence, crafting applications that seamlessly blend advanced capabilities with user-friendly design is no small feat. Today, we take you behind the scenes of building an AI Powered Email Assistant, a project that leverages Semantic Kernel for embedding generation and indexing, Neon PostgreSQL for vector storage, and the Azure OpenAI API for generative AI capabilities. This blog post is a practical guide to implementing a powerful AI-driven solution from scratch.

The Vision

Our AI Powered Email Assistant is designed to:

- Draft emails automatically using input prompts.

- Enable easy approval, editing, and sending via Microsoft Graph API.

- Create and store embeddings of drafted and sent emails in a Neon serverless PostgreSQL database.

- Provide a search feature to retrieve similar emails based on contextual embeddings.

This application combines cutting-edge AI technologies and modern web development practices, offering a seamless user experience for drafting and managing emails.

The Core Technologies of our AI Powered Email Assistant

1. Semantic Kernel

Semantic Kernel simplifies the integration of AI services into applications. It provides robust tools for text generation, embedding creation, and memory management. For our project, Semantic Kernel acts as the foundation for:

- Generating email drafts via Azure OpenAI.

- Creating embeddings for storing and retrieving contextual data.

2. Vector Indexing with Neon PostgreSQL

Neon, a serverless PostgreSQL platform, stores and retrieves embeddings through the pgvector extension. Because Neon separates storage from compute and autoscales compute with demand, it is a good fit for real-time AI applications.

3. Azure OpenAI API

With Azure OpenAI, the project uses models like gpt-4 and text-embedding-ada-002 for text generation and embedding creation. These APIs provide flexible, powerful building blocks for AI-driven workflows.

How We Built our AI Powered Email Assistant

Step 1: Frontend – A React-Based Interface

The frontend, built in React, provides users with a sleek interface to:

- Input recipient details, subject, and email description.

- Generate email drafts with a single click.

- Approve, edit, and send emails directly.

We also added a loading spinner for user feedback, plus a search feature for retrieving similar emails.

Key Features:

- State Management: For handling draft generation and email sending.

- API Integration: React fetch calls connect seamlessly to backend APIs.

- Dynamic UI: A real-time experience for generating and reviewing drafts.

Step 2: Backend – Semantic Kernel and Vector Storage

The backend, powered by ASP.NET Core, uses Semantic Kernel for AI services and Neon for vector indexing. Key backend components include:

Semantic Kernel Services:

- Text Embedding Generation: Uses Azure OpenAI’s `text-embedding-ada-002` to create embeddings for email content.

- Draft Generation: The AI Powered Email Assistant creates email drafts based on user inputs using the Azure OpenAI gpt-4 model (OpenAI Skill).

```csharp
// Requires: using Microsoft.SemanticKernel; using Microsoft.SemanticKernel.ChatCompletion;
public async Task<string> GenerateEmailDraftAsync(string subject, string description)
{
    try
    {
        // Resolve the chat completion service (Azure OpenAI gpt-4) registered on the kernel.
        var chatCompletionService = _kernel.GetRequiredService<IChatCompletionService>();

        var prompt = $"Draft a professional email with the following details:\nSubject: {subject}\nDescription: {description}";

        // Send the prompt as a single user message and return the generated text.
        var result = await chatCompletionService.GetChatMessageContentAsync(prompt);
        return result.Content ?? string.Empty;
    }
    catch (Exception ex)
    {
        // Wrap the failure with context while preserving the original exception.
        throw new InvalidOperationException($"Error generating email draft: {ex.Message}", ex);
    }
}
```

Vector Indexing with Neon:

- Embedding Storage: Stores embeddings in Neon using the `pgvector` extension.

- Contextual Search: Retrieves similar emails by calculating vector similarity.

Email Sending via Microsoft Graph:

- Enables sending emails directly through an authenticated Microsoft Graph API integration.
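Microsoft Graph's `sendMail` action takes a JSON body with a `message` object and an optional `saveToSentItems` flag. With the *Application* `Mail.Send` permission, there is no signed-in user. The call therefore targets a mailbox through `/users/{id or userPrincipalName}/sendMail` rather than `/me/sendMail`.

The sketch below only builds the URL and payload with `System.Text.Json`. The sender and recipient addresses are hypothetical, and the project's `EmailService.cs` may well use the Graph SDK instead.

```csharp
using System;
using System.Text.Json;

// Application permissions have no signed-in user, so the request targets a
// specific mailbox via /users/{id or userPrincipalName} instead of /me.
static string BuildSendMailUrl(string senderUpn) =>
    $"https://graph.microsoft.com/v1.0/users/{Uri.EscapeDataString(senderUpn)}/sendMail";

// JSON body for the Graph sendMail action: a message plus the saveToSentItems flag.
static string BuildSendMailPayload(string to, string subject, string bodyText, bool saveToSentItems = true)
{
    var payload = new
    {
        message = new
        {
            subject,
            body = new { contentType = "Text", content = bodyText },
            toRecipients = new[] { new { emailAddress = new { address = to } } }
        },
        saveToSentItems
    };
    return JsonSerializer.Serialize(payload);
}

// Hypothetical sender mailbox and recipient.
var url = BuildSendMailUrl("assistant@contoso.com");
var json = BuildSendMailPayload("jane@contoso.com", "Project update", "Hi Jane, here is the latest status.");
Console.WriteLine(url);
Console.WriteLine(json);
```

The resulting JSON is POSTed to the URL with an `Authorization: Bearer` header carrying an app-only Graph token.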

Key Backend Features:

- Middleware for PIN Authentication: Adds a secure layer to ensure only authorized users access the application.

- CORS Policies: Allow safe frontend-backend communication.

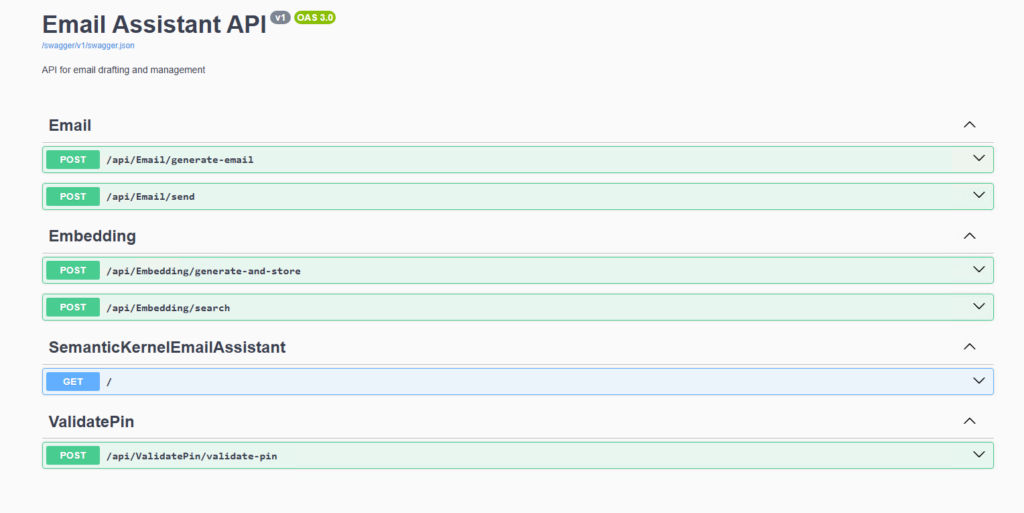

- Swagger Documentation: Swagger docs simplify API testing during development.
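The post does not show the PIN middleware itself. A core piece of any such check is comparing the submitted PIN with the stored one without leaking timing information.

Below is a minimal sketch of that comparison, assuming the expected PIN is loaded from configuration or Azure Key Vault. `IsPinValid` is a hypothetical helper, not code from the project. It hashes both values with SHA-256 and compares the digests with `CryptographicOperations.FixedTimeEquals`.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper for the PIN middleware: compare the PIN sent by the client
// with the expected PIN (e.g. loaded from Azure Key Vault).
// Hashing both values gives equal-length inputs, and FixedTimeEquals compares them
// in time that does not depend on where the two digests differ.
static bool IsPinValid(string? suppliedPin, string expectedPin)
{
    if (string.IsNullOrEmpty(suppliedPin) || string.IsNullOrEmpty(expectedPin))
        return false;

    byte[] supplied = SHA256.HashData(Encoding.UTF8.GetBytes(suppliedPin));
    byte[] expected = SHA256.HashData(Encoding.UTF8.GetBytes(expectedPin));
    return CryptographicOperations.FixedTimeEquals(supplied, expected);
}

Console.WriteLine(IsPinValid("4821", "4821")); // True
Console.WriteLine(IsPinValid("0000", "4821")); // False
Console.WriteLine(IsPinValid(null, "4821"));   // False
```

In `Program.cs`, an `app.Use(async (context, next) => ...)` middleware could read the PIN from a request header and call this helper. The header name is your choice; the post does not specify one. On failure it would set `context.Response.StatusCode = 401` and return, and otherwise call `next(context)`.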

Step 3: Integration with Neon

The pgvector extension in Neon PostgreSQL enables efficient vector storage and similarity search. Here’s how we integrated Neon into the project:

- Table Design: A dedicated table for embeddings with columns for `subject`, `content`, `type`, `embedding`, and `created_at`. The `type` column holds one of two values, `draft` or `sent`, so users can also explore previous unsent drafts.

- Index Optimization: Creating an index early saves time and effort before performance issues appear. The search query ranks by cosine distance (`<=>`), so the IVFFlat index should use the `vector_cosine_ops` operator class. Without an operator class, pgvector defaults to L2 distance and the index cannot serve the query: `CREATE INDEX ON embeddings USING ivfflat (embedding vector_cosine_ops) WITH (lists = 100);`

- Search Implementation: Using SQL queries with vector operations to find the most relevant embeddings.

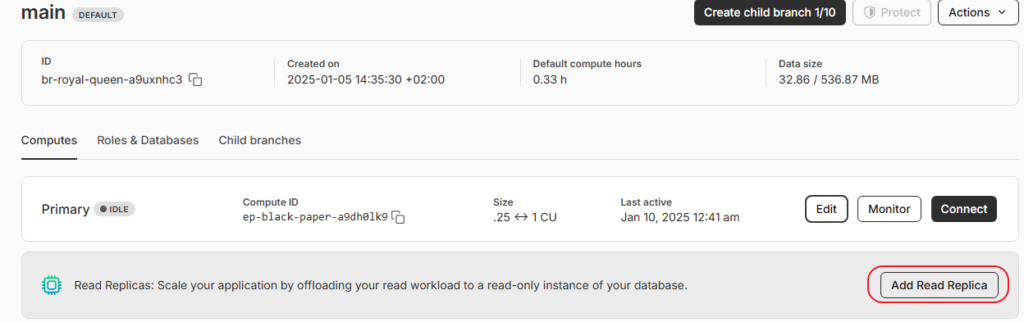

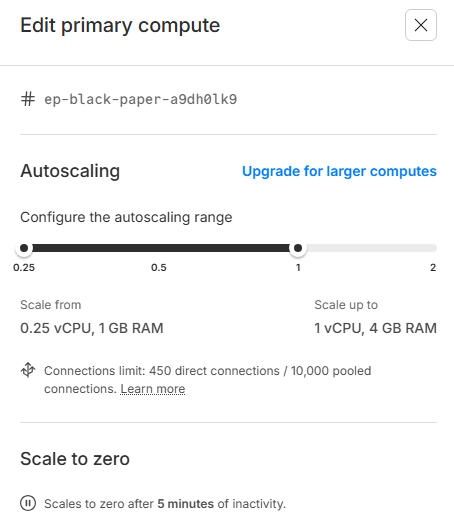

- Enhanced Serverless Out of the Box: Even the free tier offers read replicas and autoscaling, making it enterprise-ready.
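The `lists = 100` value in the IVFFlat index is a tuning knob rather than a constant. The pgvector README offers these starting points:

- `rows / 1000` lists for up to 1M rows, and `sqrt(rows)` beyond that.
- `ivfflat.probes` set to about `sqrt(lists)` at query time.
- Build the index after the table already holds data.

The hypothetical helper below turns that rule of thumb into SQL. It uses `vector_cosine_ops` so the index matches the `<=>` operator in the search query.

```csharp
using System;

// Starting points from the pgvector README's IVFFlat guidance:
// lists = rows / 1000 up to 1M rows, sqrt(rows) above that; probes = sqrt(lists).
static int RecommendedLists(long rowCount)
{
    if (rowCount <= 0)
        throw new ArgumentOutOfRangeException(nameof(rowCount), "Build the index after loading data.");

    double lists = rowCount <= 1_000_000 ? rowCount / 1000.0 : Math.Sqrt(rowCount);
    return Math.Max(1, (int)Math.Round(lists));
}

static int RecommendedProbes(int lists) => Math.Max(1, (int)Math.Round(Math.Sqrt(lists)));

// Build the index DDL (cosine opclass to match <=>) plus the query-time probes setting.
static string BuildIvfflatSql(long rowCount)
{
    int lists = RecommendedLists(rowCount);
    int probes = RecommendedProbes(lists);
    return $"CREATE INDEX ON embeddings USING ivfflat (embedding vector_cosine_ops) WITH (lists = {lists});\n"
         + $"SET ivfflat.probes = {probes};";
}

// For a hypothetical table of ~100,000 emails this yields lists = 100 and probes = 10.
Console.WriteLine(BuildIvfflatSql(100_000));
```

By this heuristic, the `lists = 100` used in the post corresponds to a table of roughly 100,000 rows. Higher `probes` values trade query speed for better recall.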

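Sample from EmbeddingService.cs. It is completed here so that it runs the query and reads the results; the `Text` and `Similarity` property names on `SearchResult` are assumed.

```csharp
// Requires: using System.Globalization; using System.Linq; using Npgsql;
public async Task<List<SearchResult>> SearchSimilarEmbeddingsAsync(List<float> queryEmbedding, int limit)
{
    var results = new List<SearchResult>();
    using var connection = new NpgsqlConnection(_connectionString);
    await connection.OpenAsync();

    // <=> is pgvector's cosine distance, so 1 - distance is the cosine similarity.
    var sql = @"
        SELECT
            input_text,
            1 - (embedding <=> @queryEmbedding::vector) AS similarity
        FROM embeddings
        ORDER BY embedding <=> @queryEmbedding::vector
        LIMIT @limit";

    using var command = new NpgsqlCommand(sql, connection);
    // Send the embedding as a pgvector text literal ("[0.1,0.2,...]") with '.' decimals.
    var vectorLiteral = "[" + string.Join(",", queryEmbedding.Select(f => f.ToString(CultureInfo.InvariantCulture))) + "]";
    command.Parameters.AddWithValue("queryEmbedding", vectorLiteral);
    command.Parameters.AddWithValue("limit", limit);

    using var reader = await command.ExecuteReaderAsync();
    while (await reader.ReadAsync())
        results.Add(new SearchResult { Text = reader.GetString(0), Similarity = reader.GetDouble(1) });
    return results;
}
```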
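pgvector's `<=>` operator returns cosine distance, that is, 1 minus cosine similarity. That is why the query selects `1 - (embedding <=> @queryEmbedding::vector)` as `similarity` and orders ascending by distance.

The sketch below reproduces that ranking in memory with made-up three-dimensional vectors; real `text-embedding-ada-002` vectors have 1,536 dimensions. It is an illustration for checking intuitions or writing unit tests, not a replacement for the database query.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Cosine distance as pgvector's <=> operator computes it: 1 - cos(a, b).
static double CosineDistance(IReadOnlyList<float> a, IReadOnlyList<float> b)
{
    if (a.Count != b.Count)
        throw new ArgumentException("Vectors must have the same dimension.");

    double dot = 0, normA = 0, normB = 0;
    for (int i = 0; i < a.Count; i++)
    {
        dot += (double)a[i] * b[i];
        normA += (double)a[i] * a[i];
        normB += (double)b[i] * b[i];
    }
    return 1 - dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
}

// In-memory equivalent of "ORDER BY embedding <=> query LIMIT limit",
// reporting 1 - distance as the similarity score like the SQL does.
static List<(string Text, double Similarity)> TopK(
    IEnumerable<(string Text, float[] Embedding)> candidates, float[] query, int limit) =>
    candidates.Select(r => (r.Text, Distance: CosineDistance(r.Embedding, query)))
              .OrderBy(r => r.Distance)
              .Take(limit)
              .Select(r => (Text: r.Text, Similarity: 1 - r.Distance))
              .ToList();

// Toy 3-dimensional embeddings (hypothetical data).
var rows = new List<(string Text, float[] Embedding)>
{
    ("Quarterly report attached", new[] { 1f, 0f, 0f }),
    ("Lunch on Friday?", new[] { 0f, 1f, 0f }),
    ("Q3 report follow-up", new[] { 0.9f, 0.1f, 0f })
};

foreach (var (text, similarity) in TopK(rows, new[] { 1f, 0f, 0f }, limit: 2))
    Console.WriteLine($"{similarity:F3}  {text}");
```

The two report emails rank first, and the unrelated lunch email is cut by the limit. This is the same ordering the SQL query produces, only without an index.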

Why This Approach Stands Out

- Efficiency: Embeddings are computed once when an email is stored, so each search is a single vector query instead of reprocessing raw email text.

- Scalability: Neon’s serverless compute, with autoscaling enabled, lets the application grow without database bottlenecks.

- User-Centric Design: The combination of React’s dynamic frontend and Semantic Kernel’s advanced AI delivers a polished user experience.

Step-by-Step Guide to Creating Your Own AI Powered Email Assistant

Find all the required code on GitHub.

Prerequisites

- Azure Account with OpenAI access

- Microsoft 365 Developer Account

- NEON PostgreSQL Account

- .NET 8 SDK

- Node.js and npm

- Visual Studio Code or Visual Studio 2022

Step 1: Setting Up Azure Resources

- Azure OpenAI Setup:
  - Create an Azure OpenAI resource
  - Deploy two models:
    - GPT-4 for text generation
    - text-embedding-ada-002 for embeddings
  - Note down endpoint and API key

- Entra ID App Registration:
  - Create new App Registration
  - Required API Permissions:
    - Microsoft Graph: Mail.Send (Application)
    - Microsoft Graph: Mail.ReadWrite (Application)
  - Generate Client Secret
  - Note down Client ID and Tenant ID
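With the Client ID, Tenant ID, and Client Secret in hand, the backend can obtain an app-only Microsoft Graph token through the OAuth 2.0 client credentials flow. Because `Mail.Send` and `Mail.ReadWrite` are application permissions, an administrator also has to grant consent before the token carries them.

The sketch below only builds the token request with base-library `HttpClient` types, and the IDs are hypothetical placeholders. The actual project may instead rely on a library such as Azure.Identity or MSAL, which also handle token caching and renewal.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;

// Build the OAuth 2.0 client credentials request that trades the app registration's
// Client ID and Client Secret for an app-only Microsoft Graph access token.
static HttpRequestMessage BuildGraphTokenRequest(string tenantId, string clientId, string clientSecret)
{
    var tokenUrl = $"https://login.microsoftonline.com/{tenantId}/oauth2/v2.0/token";
    var form = new Dictionary<string, string>
    {
        ["client_id"] = clientId,
        ["client_secret"] = clientSecret,
        // ".default" asks for all application permissions already granted to the app,
        // e.g. Mail.Send and Mail.ReadWrite from the registration above.
        ["scope"] = "https://graph.microsoft.com/.default",
        ["grant_type"] = "client_credentials"
    };
    return new HttpRequestMessage(HttpMethod.Post, tokenUrl) { Content = new FormUrlEncodedContent(form) };
}

// Placeholder values (hypothetical); load the real ones from configuration or Key Vault.
using var request = BuildGraphTokenRequest(
    "contoso.onmicrosoft.com", "11111111-1111-1111-1111-111111111111", "<client-secret>");
Console.WriteLine(request.RequestUri);

// To send it: using var http = new HttpClient(); var response = await http.SendAsync(request);
// The JSON response's "access_token" goes into an "Authorization: Bearer ..." header for Graph calls.
```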

Step 2: Database Setup

- NEON PostgreSQL:
  - Create a new project
  - Create a database
  - Enable the pgvector extension (`CREATE EXTENSION IF NOT EXISTS vector;`)
  - Save connection string
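Npgsql, which the search code uses through `NpgsqlConnection`, expects a `key=value` connection string. The string you saved from Neon may instead be in `postgresql://user:password@host/dbname?sslmode=require` URI form. In that case, a small converter like the hypothetical one below can translate it.

The endpoint and credentials in the usage line are made up. In a real project, `NpgsqlConnectionStringBuilder` is a safer way to assemble the string, because it quotes values that contain characters such as `;`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: turn a "postgresql://user:password@host/dbname?..." URI
// into the keyword=value format that NpgsqlConnection accepts.
// Values are not quoted, so this assumes no ';' or '=' in the password.
static string ToNpgsqlConnectionString(string postgresUri)
{
    var uri = new Uri(postgresUri);
    string[] userInfo = uri.UserInfo.Split(':', 2);

    var parts = new List<string>
    {
        $"Host={uri.Host}",
        $"Port={(uri.Port > 0 ? uri.Port : 5432)}", // Uri reports -1 when no port is given
        $"Database={uri.AbsolutePath.TrimStart('/')}",
        $"Username={Uri.UnescapeDataString(userInfo[0])}",
        $"Password={(userInfo.Length > 1 ? Uri.UnescapeDataString(userInfo[1]) : string.Empty)}",
        "SSL Mode=Require" // Neon only accepts TLS connections; adjust SSL options to your Npgsql version
    };
    return string.Join(";", parts);
}

// Made-up endpoint and credentials for illustration.
var connectionString = ToNpgsqlConnectionString(
    "postgresql://alex:s3cret@ep-example-123456.eu-central-1.aws.neon.tech/neondb?sslmode=require");
Console.WriteLine(connectionString);
```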

Step 3: Backend Implementation (.NET)

Project Structure:

- /Controllers
  - EmailController.cs (handles email operations)
  - HomeController.cs (root routing)
  - VectorSearchController.cs (similarity search)
- /Services
  - EmailService.cs (Graph API integration)
  - SemanticKernelService.cs (AI operations)
  - VectorSearchService.cs (embedding operations)
  - OpenAISkill.cs (email generation)

Key Components:

- SemanticKernelService:
  - Initializes Semantic Kernel
  - Manages AI model connections
  - Handles prompt engineering

- EmailService:
  - Microsoft Graph API integration
  - Email sending functionality
  - Authentication management

- VectorSearchService:
  - Generates embeddings
  - Manages vector storage
  - Performs similarity searches

Step 4: Configuration

- Create a new .NET project with `dotnet new webapi -n SemanticKernelEmailAssistant`.

- Configure appsettings.json with your connection settings.

- Install Semantic Kernel (see SemanticKernelEmailAssistant.csproj for all packages and versions). Versions are important!

- When all of your files are complete, build and publish with `dotnet build && dotnet publish -c Release`.

- To test locally, simply run `dotnet run`.

Step 5: React Frontend

- Start a new React app with `npx create-react-app ai-email-assistant`.

- Change into the newly created directory (`cd ai-email-assistant`).

- Copy all files from the Git repository and run `npm install`.

- Initialize Tailwind with `npx tailwindcss init` (if you see any related errors).

Step 6: Deploy to Azure

Both of our apps are containerized with Docker, so make sure to get the Dockerfile for each app.

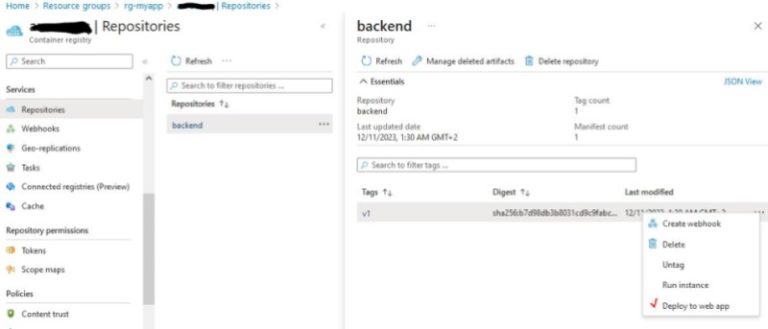

Build the backend image with `docker build -t backend .`, then tag and push it with `docker tag backend {acrname}.azurecr.io/backend:v1` and `docker push {acrname}.azurecr.io/backend:v1`. The same applies to the frontend. Before pushing, log in to Azure Container Registry with `az acr login --name $(az acr list -g myresourcegroup --query "[].{name: name}" -o tsv)`. The new repository then appears in Azure Container Registry, and we can deploy our Web Apps from it:

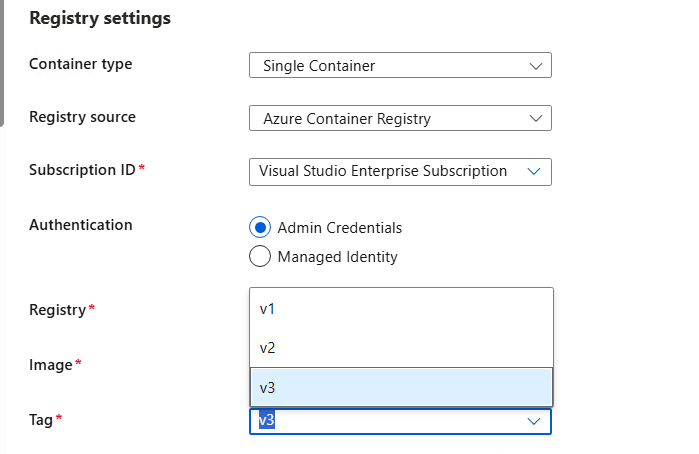

Tagging lets us keep several image versions and choose which one to deploy from the Deployment Center menu of Azure Web Apps.

Troubleshooting and Maintenance

- Backend Issues:
  - Use Swagger (`/docs`) for API testing and debugging.
  - Check Azure Key Vault for PIN and credential updates.

- Embedding Errors:
  - Ensure `pgvector` is correctly configured in Neon PostgreSQL.
  - Verify the Azure OpenAI API key and endpoint are correct.

- Frontend Errors:
  - Use browser dev tools to debug fetch requests.
  - Ensure environment variables are correctly set during build and runtime.

Conclusion

In today’s rapidly evolving tech landscape, building an AI-powered application is no longer a daunting task, thanks to technologies like Semantic Kernel, Neon PostgreSQL, and Azure OpenAI. This project demonstrates how these tools work together to deliver a robust, scalable, and user-friendly solution.

Semantic Kernel streamlines AI orchestration and prompt management. Neon PostgreSQL provides serverless database capabilities that scale automatically with your application’s needs. Azure OpenAI’s API and language models deliver high-quality text generation and embeddings.

Whether you’re developing a customer service bot, a content creation tool, or a data analysis platform, this stack offers the flexibility to bring your ideas to life. If you’re ready to create your own AI application, Semantic Kernel and Neon are a strong starting point. They balance sophisticated functionality with straightforward implementation, and they scale as your project grows.
