Multi Model Deployment with Azure AI Foundry Serverless, Python and Container Apps

Introduction

Azure AI Foundry is a comprehensive AI suite with a broad set of serverless and managed model offerings designed to democratize AI deployment. Whether you’re running a small startup or a Fortune 500 enterprise, Azure AI Foundry provides the flexibility and scalability needed to implement and manage machine learning and AI models. By building on Azure’s cloud infrastructure, you can focus on innovating and delivering value while Azure takes care of the heavy lifting behind the scenes.

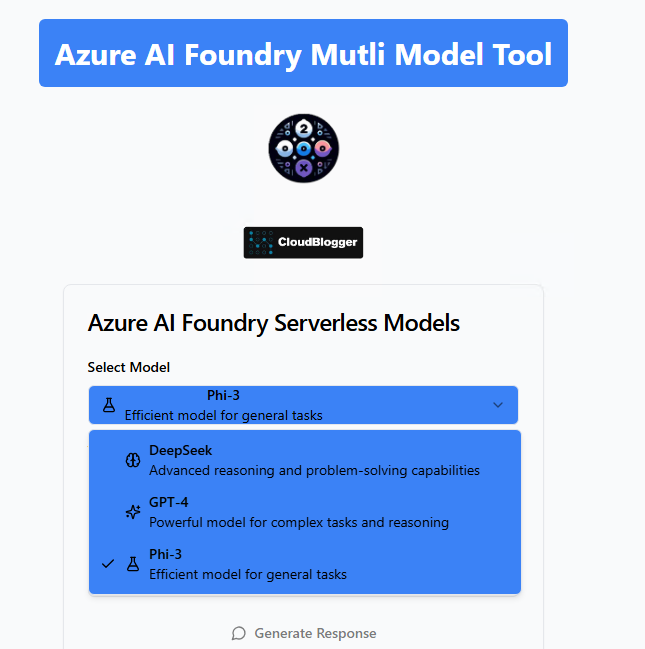

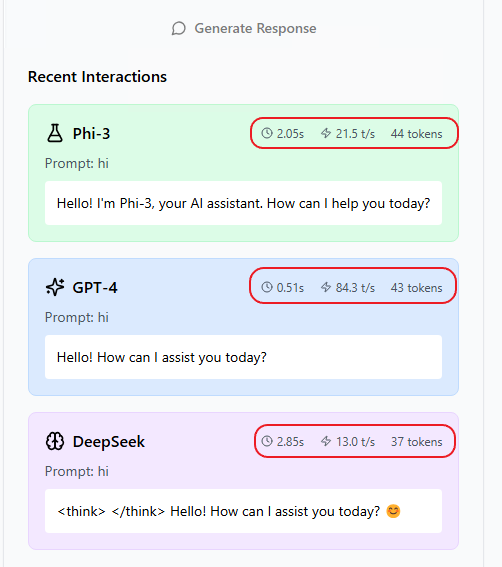

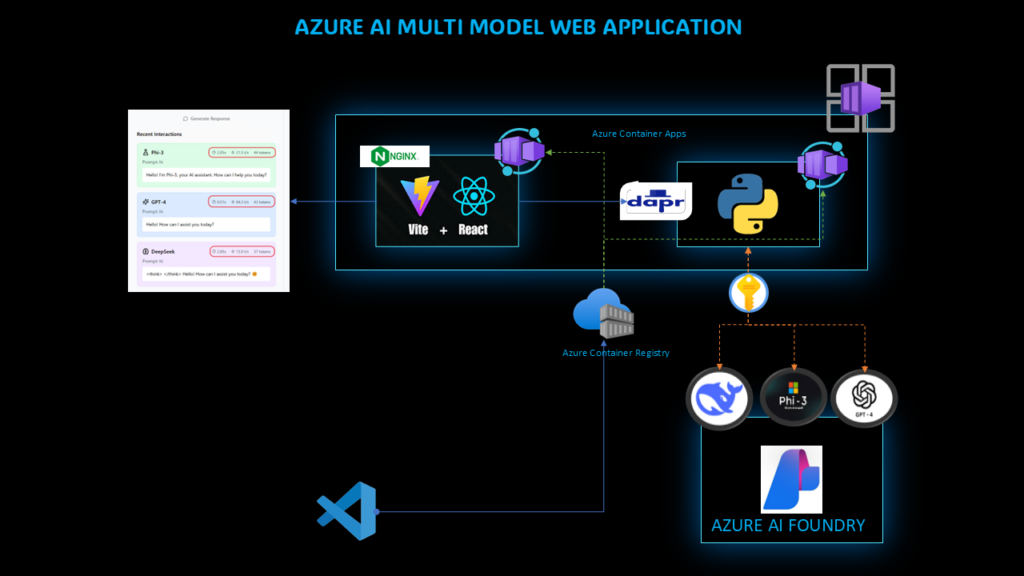

In this demonstration, we build an Azure Container Apps stack and deploy a Web App that lets users interact with three models: GPT-4, DeepSeek, and Phi-3. Users can select any of these models for Chat Completions and see its actual performance, token consumption, and overall efficiency through real-time metrics. The deployment showcases the versatility of Azure AI Foundry and also gives businesses a practical framework for observing and measuring AI effectiveness, which supports data-driven decisions about which model to use.

Azure AI Foundry: The evolution

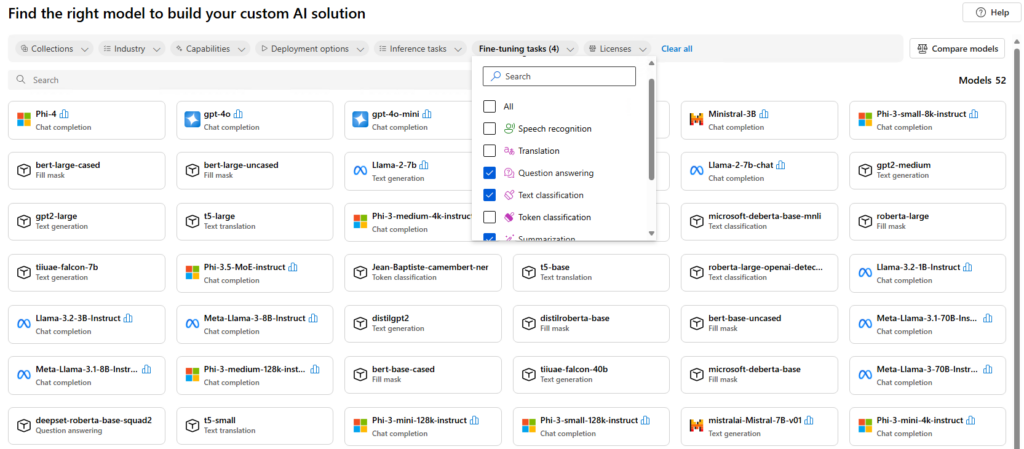

Azure AI Foundry represents the next evolution in Microsoft’s AI offerings, building on the success of Azure AI and Cognitive Services. This unified platform is designed to streamline the development, deployment, and management of AI solutions, providing developers and enterprises with a comprehensive suite of tools and services. With Azure AI Foundry, users gain access to a robust model catalog, collaborative GenAIOps tools, and enterprise-grade security features. The platform’s unified portal simplifies the AI development lifecycle, allowing seamless integration of various AI models and services. Azure AI Foundry offers the flexibility and scalability needed to bring your AI projects to life, with deep insights and a fast adoption path for users. The Model Catalog lets us filter and compare models against our requirements and create deployments directly from the interface.

Building the Application

Before describing the methodology and the process, we have to make sure our dependencies are in place. So let’s have a quick look at the prerequisites of our deployment.

Prerequisites

- Azure subscription

- Azure AI Foundry Hub with a project in East US. The models are all supported in East US.

- VSCode with the Azure Resources extension

There is no need to show the Azure resource deployment steps, since there are numerous ways to do it and I have also showcased them in previous posts. In fact, it is a standard set of services that supports our microservices infrastructure:

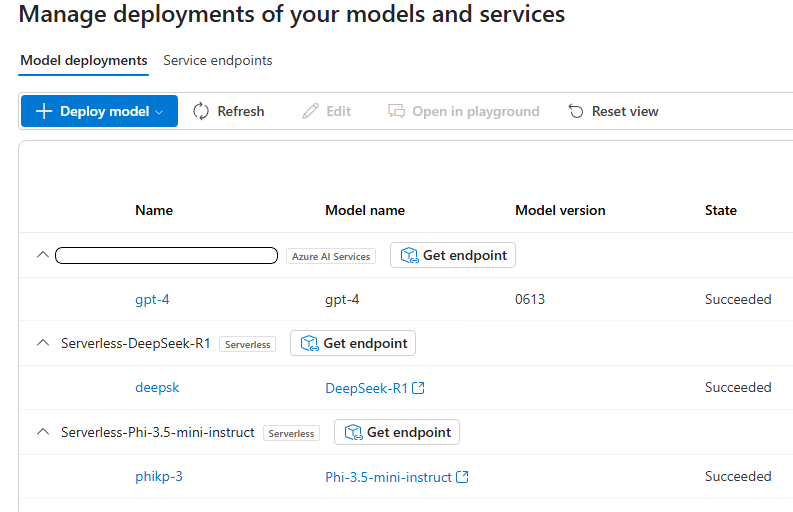

Azure Container Registry, Azure Key Vault, an Azure user-assigned managed identity, an Azure Container Apps environment, and finally our Azure AI Foundry model deployments.

Frontend – Vite + React + TS

The frontend is built using Vite and React and features a dropdown menu for model selection, a text area for user input, real-time response display, as well as loading states and error handling. Key considerations in the frontend implementation include the use of modern React patterns and hooks, ensuring a responsive design for various screen sizes, providing clear feedback for user interactions, and incorporating elegant error handling.

The current implementation allows us to switch models even after a conversation has started, and it keeps up to 5 messages as chat history. What sets the frontend apart is the performance information shown for each response: tokens, tokens per second, and total time.
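The bounded history and the per-response metrics come down to simple bookkeeping. The sketch below shows that arithmetic in Python (the backend language) rather than in the React code itself. The names `ResponseMetrics`, `new_history`, and `timed_call` are hypothetical, and `generate` stands in for whatever function actually calls the model.

```python
import time
from collections import deque
from dataclasses import dataclass

MAX_HISTORY = 5  # the post keeps up to 5 messages as chat history


@dataclass
class ResponseMetrics:
    tokens: int
    total_time_s: float

    @property
    def tokens_per_second(self) -> float:
        # Guard against a zero duration to avoid ZeroDivisionError.
        if self.total_time_s <= 0:
            return 0.0
        return self.tokens / self.total_time_s


def new_history() -> deque:
    # A bounded deque drops the oldest message once it is full.
    return deque(maxlen=MAX_HISTORY)


def timed_call(generate, prompt: str):
    """Call generate(prompt) and measure wall-clock time.

    `generate` is a hypothetical callable returning (text, token_count).
    """
    start = time.perf_counter()
    text, tokens = generate(prompt)
    elapsed = time.perf_counter() - start
    return text, ResponseMetrics(tokens=tokens, total_time_s=elapsed)
```

For example, a response of 120 tokens that took 2 seconds yields 60 tokens per second, and appending a sixth message to the history evicts the first one.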

Backend – Python + FastAPI

The backend is built with FastAPI and is responsible for model selection and configuration, integration with Azure AI Foundry, request and response processing, and error handling and validation. A directory structure like the following helps us organize our services and take advantage of Python’s modularity:

    backend/
    ├── app/
    │   ├── __init__.py
    │   ├── main.py
    │   ├── config.py
    │   ├── api/
    │   │   ├── __init__.py
    │   │   └── routes.py
    │   ├── models/
    │   │   ├── __init__.py
    │   │   └── request_models.py
    │   └── services/
    │       ├── __init__.py
    │       └── azure_ai.py
    ├── run.py  # For local runs
    ├── Dockerfile
    ├── requirements.txt
    └── .env

Azure Container Apps

Dapr makes it easy to connect the frontend and the backend, since it is natively supported and integrated in Azure Container Apps. The frontend simply posts to a relative API path:

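    try {
      const response = await fetch('/api/v1/generate', {
        method: 'POST',
        headers: {
          'Content-Type': 'application/json',
        },
        body: JSON.stringify({
          model: selectedModel,
          prompt: userInput,
          parameters: {
            temperature: 0.7,
            max_tokens: 800
          }
        }),
      });
      // Response handling is not shown in the original; minimal placeholder:
      if (!response.ok) throw new Error(`Request failed: ${response.status}`);
    } catch (error) {
      console.error(error);
    }

However, we need to configure NGINX correctly so that it proxies these requests to the Dapr sidecar, since the frontend runs from a container image.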

    # API endpoints via Dapr
    location /api/v1/ {
        proxy_pass http://localhost:3500/v1.0/invoke/backend/method/api/v1/;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_cache_bypass $http_upgrade;
    }

Azure Key Vault

As always, all our secret variables, such as the API endpoints and the API keys, are stored in Key Vault. We create a Key Vault client in our backend and retrieve each secret only when it is needed. This keeps secrets out of the container image and configuration files and avoids fetching values the current request does not use.
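A minimal sketch of this on-demand retrieval is shown below, assuming the `azure-identity` and `azure-keyvault-secrets` packages and a user-assigned managed identity. The `LazySecrets` class, the `key_vault_fetcher` helper, and the in-memory caching are illustrative assumptions, not the post's actual implementation.

```python
from typing import Callable, Dict


class LazySecrets:
    """Fetch each secret on first use and keep it in memory afterwards."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch
        self._cache: Dict[str, str] = {}

    def get(self, name: str) -> str:
        # Only hit the vault the first time a given secret is requested.
        if name not in self._cache:
            self._cache[name] = self._fetch(name)
        return self._cache[name]


def key_vault_fetcher(vault_url: str, client_id: str) -> Callable[[str], str]:
    """Build a fetch function backed by Azure Key Vault.

    The Azure SDK imports live inside the function so the rest of the
    module can be used (and tested) without those packages installed.
    """
    from azure.identity import ManagedIdentityCredential
    from azure.keyvault.secrets import SecretClient

    # client_id selects the user-assigned managed identity.
    credential = ManagedIdentityCredential(client_id=client_id)
    client = SecretClient(vault_url=vault_url, credential=credential)
    return lambda name: client.get_secret(name).value
```

In the backend this might be wired up as `secrets = LazySecrets(key_vault_fetcher(vault_url, client_id))`, with the vault URL and identity client ID supplied through environment variables. Whether to cache at all is a design choice: caching saves round trips, but a rotated key is only picked up after a restart.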

Deployment Considerations

When deploying your application:

- Set up proper environment variables

- Configure CORS settings appropriately

- Implement monitoring and logging

- Set up appropriate scaling policies
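The first two items can be sketched as a small settings loader that fails fast at startup. This is only an illustration using the standard library. The variable names `KEY_VAULT_URL`, `ALLOWED_ORIGINS`, and `LOG_LEVEL` are hypothetical, and rejecting a wildcard origin is one possible policy rather than a requirement of the platform.

```python
import os
from dataclasses import dataclass
from typing import List, Mapping


@dataclass(frozen=True)
class Settings:
    key_vault_url: str
    allowed_origins: List[str]
    log_level: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    # All variable names below are hypothetical examples.
    vault_url = env.get("KEY_VAULT_URL", "").strip()
    if not vault_url:
        raise ValueError("KEY_VAULT_URL must be set")

    # Comma-separated list of origins allowed to call the API.
    raw_origins = env.get("ALLOWED_ORIGINS", "")
    origins = [o.strip() for o in raw_origins.split(",") if o.strip()]
    if "*" in origins:
        raise ValueError("wildcard CORS origin rejected; list explicit origins")

    log_level = env.get("LOG_LEVEL", "INFO").upper()
    return Settings(vault_url, origins, log_level)
```

The resulting `allowed_origins` list can then be passed to whatever CORS mechanism the backend uses, and `log_level` to the logging setup.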

Azure AI Foundry: Multi Model Architecture

The solution is built on Azure Container Apps for serverless scalability. The frontend and backend containers are hosted in Azure Container Registry and deployed to Container Apps with Dapr integration for service-to-service communication. Azure Key Vault manages sensitive configurations like API keys through a user-assigned Managed Identity. The backend connects to three Azure AI Foundry models (DeepSeek, GPT-4, and Phi-3), each with its own endpoint and configuration. This serverless architecture ensures high availability, secure secret management, and efficient model interaction while maintaining cost efficiency through consumption-based pricing.
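One way to picture "each with its own endpoint and configuration" is a small registry that maps the model name chosen in the UI to its settings. The sketch below is an assumption about how this could be organized. The secret names are hypothetical, while the default `max_tokens` and `temperature` mirror the values sent by the frontend request.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ModelConfig:
    endpoint_secret: str  # hypothetical Key Vault secret with the endpoint URL
    key_secret: str       # hypothetical Key Vault secret with the API key
    max_tokens: int = 800
    temperature: float = 0.7


MODEL_REGISTRY: Dict[str, ModelConfig] = {
    "gpt-4": ModelConfig("gpt4-endpoint", "gpt4-api-key"),
    "deepseek": ModelConfig("deepseek-endpoint", "deepseek-api-key"),
    "phi-3": ModelConfig("phi3-endpoint", "phi3-api-key"),
}


def resolve_model(name: str) -> ModelConfig:
    """Return the configuration for a model name, ignoring case and spaces."""
    key = name.strip().lower()
    try:
        return MODEL_REGISTRY[key]
    except KeyError:
        supported = sorted(MODEL_REGISTRY)
        raise ValueError(
            f"Unsupported model {name!r}; choose one of {supported}"
        ) from None
```

Keeping the lookup in one place means adding a fourth model is a one-line change, and an unknown name is rejected before any request reaches Azure AI Foundry.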

Closing

This Azure AI Foundry Models Demo showcases the power of serverless AI integration in modern web applications. By leveraging Azure Container Apps, Dapr, and Azure Key Vault, we’ve created a secure, scalable, and cost-effective solution for AI model comparison and interaction. The project demonstrates how different AI models can be effectively compared and utilized, providing insights into their unique strengths and performance characteristics. Whether you’re a developer exploring AI capabilities, an architect designing AI solutions, or a business evaluating AI models, this demo offers practical insights into Azure’s AI infrastructure and serverless computing potential.